Your Autonomous Robot is Just Hours Away

I love figuring things out. After more than a decade in the Industrial IoT space, I found a new playground in robotics and autonomy. It was a bit overwhelming at first, but I quickly found that the solutions in use shared architectures most developers would be comfortable with. All the tools I already knew were there: Linux, multi-core processing, real network stacks, Docker and GPU acceleration, all running on the edge while flying! With this in mind, and with a new problem set to solve around robotics and enabling autonomy, I've put together this high-level primer to help others build their own autonomous drones.

To start, we break essential robotic functions into three parts: sense, think and act. These three behaviors are essential to the robot's success because together they give it sight, autonomy and agility.
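To make the loop concrete, here is a minimal Python sketch of one sense-think-act cycle. Everything in it is a hypothetical illustration, not ModalAI or PX4 code: the `sense`, `think` and `act` functions, the 2 m safety distance and the 3 m/s cruise speed are assumptions chosen only to show how data flows from sensors, to a decision, to an actuator command.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Perception:
    """What the robot 'knows' after sensing (hypothetical, minimal)."""
    obstacle_distance_m: float  # nearest obstacle along the planned path


def sense(depth_readings_m: Sequence[float]) -> Perception:
    """Sense: reduce raw range readings to the nearest obstacle distance."""
    return Perception(obstacle_distance_m=min(depth_readings_m))


def think(perception: Perception, safe_distance_m: float = 2.0) -> str:
    """Think: keep the planned route if the path is clear, otherwise re-plan."""
    if perception.obstacle_distance_m > safe_distance_m:
        return "continue"
    return "replan"


def act(decision: str, cruise_speed_mps: float = 3.0) -> float:
    """Act: turn the decision into a forward-speed setpoint (stop to re-plan)."""
    return cruise_speed_mps if decision == "continue" else 0.0


def step(depth_readings_m: Sequence[float]) -> float:
    """One pass through the sense-think-act loop."""
    return act(think(sense(depth_readings_m)))


if __name__ == "__main__":
    print(step([5.0, 4.2, 6.1]))  # clear path: cruise speed
    print(step([5.0, 1.5]))       # obstacle inside 2 m: stop and re-plan
```

On a real vehicle this cycle runs continuously: sensing is fed by the image sensors, thinking happens on the companion computer, and acting is handed to the flight controller.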

Sense - Multiple Image Sensors for GPS-Denied Navigation and Obstacle Avoidance

Sight is one of the most important human senses because it gives us context about our environment at the highest bandwidth. For most of robotics history, robots have lacked this sense and have been limited to ‘feeling out’ their location and motion with lower-throughput sensors like IR sensors, accelerometers and gyros. Thanks to the cell phone industry, we now have access to very high-performance edge devices built to put dog-ear overlays on your face (which also happen to be great for adding perception to your robot). By combining the ever-growing variety of image sensors with multi-core, GPU-enabled edge devices optimized for size, weight and power (SWaP), it is now very easy to add perception to your robot. We'll focus on the small unmanned aircraft system (sUAS) use case, the drones you commonly see flying around, and show how quickly you can add state-of-the-art sensing to your vehicle.

There are a variety of image sensors that provide different capabilities, and by combining them, you can achieve higher levels of autonomy for your drone. Time-of-Flight (ToF) and stereo sensors capture high-resolution depth data that tells the drone how far away objects are. A fisheye lens combined with a VGA sensor captures raw data used for localization in GPS-denied environments. A 4K high-resolution sensor produces 30 FPS video that gives the drone the data it needs to classify objects in its environment. When paired with our VOXL® or VOXL 2 companion computer, these sensors enable drones to make intelligent decisions autonomously based on their three-dimensional, and often dynamic, surroundings. If a drone sees something unusual on its course, it can choose a different route. If a drone sees something it's been trained to detect, it can react accordingly.
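The depth sensors mentioned above rest on two simple relations, sketched below in Python. A time-of-flight sensor times light's round trip, so distance is half the round-trip time multiplied by the speed of light (many ToF cameras infer that time indirectly from the phase of modulated light, which this sketch does not model). A rectified stereo pair recovers depth as Z = f·B/d, where f is the focal length in pixels, B is the baseline between the two cameras and d is the disparity in pixels. The round-trip time, focal length, baseline and disparity values in the example are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Time of flight: light travels to the target and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Rectified stereo: depth Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero disparity means infinitely far)")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical values: 20 ns round trip; 500 px focal length, 8 cm baseline.
    print(f"ToF: {tof_distance_m(20e-9):.3f} m")
    for d in (40, 20, 10):
        print(f"disparity {d:>2} px -> depth {stereo_depth_m(500, 0.08, d):.2f} m")
```

Because depth is inversely proportional to disparity, halving the disparity doubles the depth, so a one-pixel matching error costs far more accuracy on distant objects than on nearby ones.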

Time-of-Flight (ToF) real-time depth sensing and mapping.

As you build your robot/drone, you might use different combinations of image sensors depending on your environment. The VOXL®, VOXL Flight and VOXL 2 support a variety of image sensor configurations. Listed below is an example that shows how you could achieve image classification, a high-resolution depth map and location tracking:

| Use case | Interface 0 | Interface 1 | Interface 2 |
|---|---|---|---|
| Obstacle avoidance, GPS-denied navigation, image classification / FPV | High-res 4K RGB, 30 Hz | Active Time-of-Flight sensor | Tracking VGA B&W global shutter, up to 90 Hz |

List of image sensors you can purchase:

- High-res 4K RGB sensor: ModalAI 4k High-resolution Sensor (IMX214, 10x10x16mm); ModalAI 4k High-resolution Sensor (IMX214, 8x8x5.8mm); ModalAI 4k High-resolution Sensor (IMX377, M12-style lens)

- Tracking VGA B&W global shutter: ModalAI Tracking VGA Global Shutter Sensor and Cable (OV7251)

- Stereo synchronized sensor pair, B&W: ModalAI Stereo VGA Global Shutter Sensor Pair (OV7251)

- Active Time-of-Flight sensor: ModalAI VOXL Time of Flight (TOF) Depth Sensor (PMD A65)

Perceive - Think - Fly to Enable Autonomous Drones

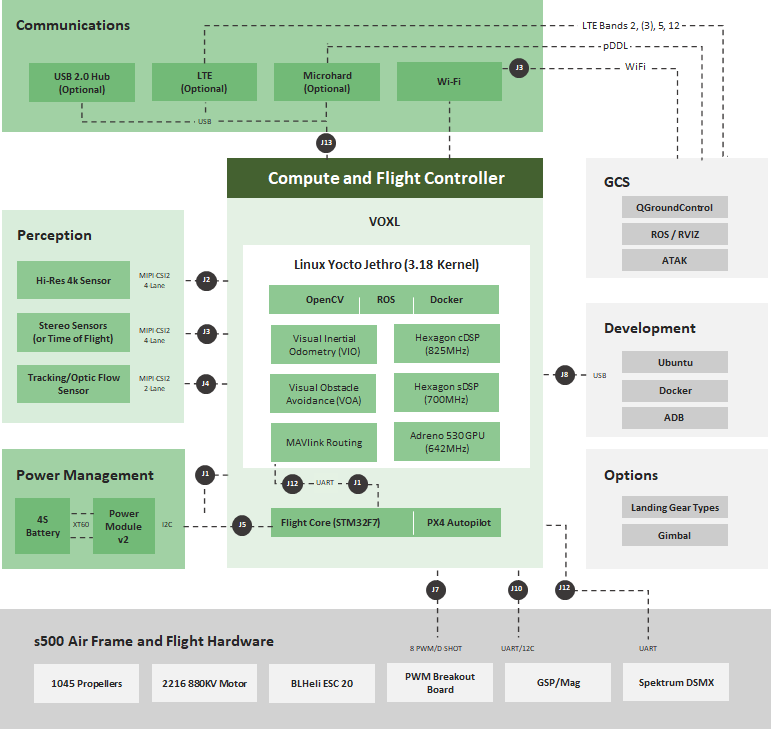

For a robot to be truly autonomous, it needs a companion computer on board that allows it to “perceive” and, in turn, act on its own. Advanced image sensors produce a lot of data, and the robot needs the processing power to think for itself and make dynamic, real-world decisions. Without a companion computer on board, reacting in real time to dynamic or unknown environments would require a human to actively control the robot and make decisions for it. An under-specified companion computer, meanwhile, may not be able to react in real time at all. The software stack and development workflow are also worth investigating early, since your use case requirements will most likely drive you to do some onboard development. A robot with a well-matched companion computer is less dependent on a human to complete missions and can take over tasks, freeing the operator to focus on the more important aspects of the job.
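To put “a lot of data” in numbers, the sketch below estimates uncompressed sensor output for the configuration in the table above. The resolutions and bit depths are assumptions for illustration (3840x2160 at 10 bits per pixel for the 4K sensor, 640x480 at 8 bits for the tracking sensor, ToF omitted); check each sensor's datasheet for its real output format.

```python
def raw_rate_mb_s(width_px: int, height_px: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed sensor throughput in megabytes (10**6 bytes) per second."""
    bytes_per_frame = width_px * height_px * bits_per_pixel / 8
    return bytes_per_frame * fps / 1e6


# Assumed stream formats (hypothetical; see each sensor's datasheet).
STREAMS = {
    "4K RGB @ 30 Hz": (3840, 2160, 10, 30),
    "VGA tracking @ 90 Hz": (640, 480, 8, 90),
}

if __name__ == "__main__":
    total = 0.0
    for name, (w, h, bpp, fps) in STREAMS.items():
        rate = raw_rate_mb_s(w, h, bpp, fps)
        total += rate
        print(f"{name}: {rate:.1f} MB/s")
    print(f"Total: {total:.1f} MB/s")
```

Under these assumptions the 4K stream alone is about 311 MB/s before any compression, and the tracking camera adds roughly 28 MB/s, all of which the companion computer has to move and process every second.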

The companion computer acts as the brain of the robot: it processes the data for “sight” and adds context to it, which is what gives the robot perception. In the drone use case, there's another critical sub-system in play: the flight controller. This role can be filled by traditional microcontrollers that embedded developers should be instantly comfortable with. Similar to how a 32-bit ARM processor communicates with sensors over SPI/I2C, the flight controller communicates with the companion computer, adding context with data like speed, direction and altitude. The flight controller also actuates the vehicle: through pulse width modulation (PWM) or digital interfaces to electronic speed controllers (ESCs), it varies motor speed. These flight controllers run autopilot software, for example the open-source PX4 flight stack. Traditionally, a flight controller has been a separate unit, but if you can find one pre-integrated with a companion computer, that can be a more efficient option.
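For a standard PWM ESC, a common convention is a pulse of roughly 1000 µs (motor stopped or idle) to 2000 µs (full throttle), repeated at the controller's update rate. The sketch below maps a normalized throttle command onto that range. The 1000/2000 µs endpoints and the 400 Hz update rate are assumed defaults for illustration: real ESCs are calibrated to their own endpoints, and digital ESC protocols don't use pulse widths at all.

```python
def throttle_to_pulse_us(throttle: float, min_us: float = 1000.0, max_us: float = 2000.0) -> float:
    """Map a normalized throttle command in [0, 1] to a PWM pulse width in microseconds."""
    clamped = min(max(throttle, 0.0), 1.0)  # never command outside the calibrated range
    return min_us + clamped * (max_us - min_us)


def duty_cycle(pulse_us: float, update_rate_hz: float) -> float:
    """Fraction of each PWM period that the signal is high."""
    period_us = 1e6 / update_rate_hz
    return pulse_us / period_us


if __name__ == "__main__":
    for t in (0.0, 0.5, 1.0):
        pulse = throttle_to_pulse_us(t)
        # 400 Hz is an assumed update rate, giving a 2500 us period.
        print(f"throttle {t:.1f} -> {pulse:.0f} us ({duty_cycle(pulse, 400):.0%} duty at 400 Hz)")
```

Clamping in the mapping function is a deliberate design choice: a bug upstream can then never command a pulse outside the range the ESC was calibrated for.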

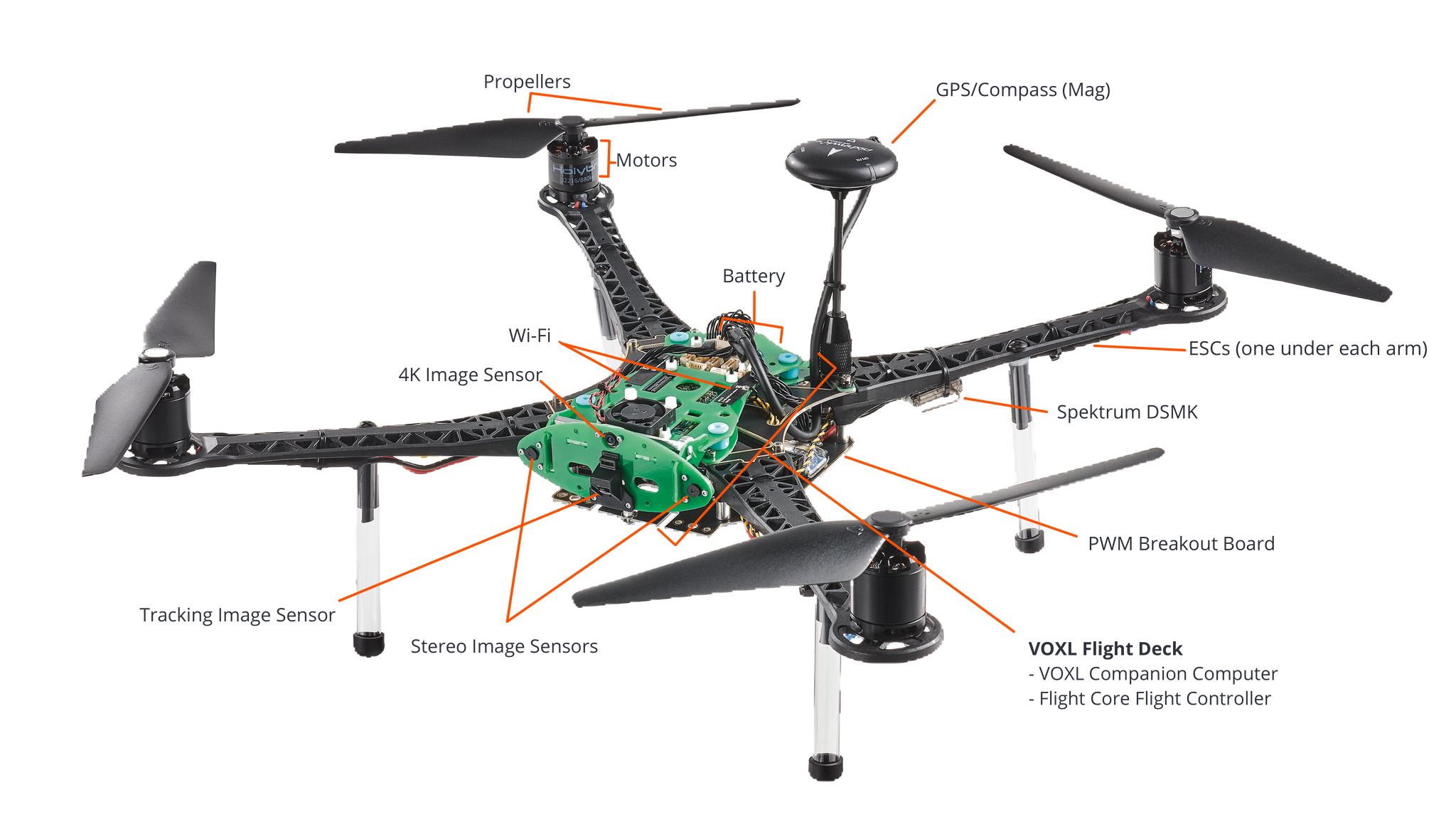

Here at ModalAI®, we wanted to create a highly integrated companion computer that has everything you need for your drone to be autonomous. Most standard drones combine a companion computer with a hodgepodge of multi-brand sensors, flight controllers, GPS modules and wireless connections. Purchasing these parts separately could run you over $1,000, and there's no guarantee that they will fit together in a SWaP-optimized form factor. With these pain points in mind, we created VOXL Flight (VOXL companion computer + Flight Core flight controller), a single-unit solution that puts all of these capabilities on one integrated PCB, ready to go, at a fraction of the size and cost of buying the parts a la carte.

In April 2022, we unveiled the Blue UAS Framework 2.0 autopilot, VOXL 2, which combines the latest Qualcomm QRB5165 processor with a PX4 autopilot to enable advanced computer vision and artificial intelligence at only 16 grams.

- VOXL 2: Blue UAS Framework 2.0 autopilot. At only 16 grams, VOXL 2 boasts premier processing power from the QRB5165, 15+ TOPS, and 5G connectivity.

- VOXL 2 Mini: an even smaller and lighter autopilot, weighing only 11 grams. VOXL 2 Mini is powered by the Qualcomm QRB5165 and is designed with an industry-standard 30.5x30.5mm mount to power the smallest drones.

Act - Let’s Build a Drone and Fly!

Moving on from the ‘brain’ of the system, the frame, ESCs, wiring and motors make up the body, which houses the compute platform and integrated sensors. A hobbyist comfortable with basic wiring and soldering should have no trouble with this step. You can design the body according to how you intend to use the drone: sophisticated, with premium parts, for professional use, or simple, with basic parts, for hobbyist use. Our m500 is a development drone you can use as a reference design for your build.

This off-the-shelf platform is a great, inexpensive way to get started. Of course, autonomous robots come in various shapes and sizes. If you are building a different kind of robot, such as a ground rover or a submarine, you can replace parts of the body accordingly. Here is a list of parts the m500 uses to complete the body:

Air Frame: The frame is the skeleton of your drone that holds everything in place. It's important to find one that is lightweight, suitable for your flying conditions, and specified to carry the battery and payload your flight time and mission require.

Motors: You need to ensure you have the correct thrust-to-weight ratio to achieve your desired flight times and performance. The m500 uses 2216 880KV motors.
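A quick way to sanity-check a motor choice is the thrust-to-weight ratio: total maximum thrust divided by the all-up weight of the drone. The sketch below computes it in grams-force. The per-motor thrust and weight figures in the example are hypothetical; take real thrust numbers for a given motor and propeller pairing from the manufacturer's thrust tables. A ratio of around 2:1 is a commonly quoted starting point for multirotors, but the right target depends on your payload and how aggressively you intend to fly.

```python
def thrust_to_weight(max_thrust_per_motor_g: float, motor_count: int, all_up_weight_g: float) -> float:
    """Ratio of total maximum static thrust to all-up weight (both in grams-force)."""
    return max_thrust_per_motor_g * motor_count / all_up_weight_g


def max_all_up_weight_g(max_thrust_per_motor_g: float, motor_count: int, target_ratio: float = 2.0) -> float:
    """Heaviest all-up weight that still meets a target thrust-to-weight ratio."""
    return max_thrust_per_motor_g * motor_count / target_ratio


if __name__ == "__main__":
    # Hypothetical figures: 1000 gf per motor, four motors, 1500 g all-up weight.
    print(f"T/W = {thrust_to_weight(1000, 4, 1500):.2f}")
    print(f"Max weight at 2:1 = {max_all_up_weight_g(1000, 4):.0f} g")
```

Running the calculation in reverse, as `max_all_up_weight_g` does, tells you how much battery and payload you can still add before the build drops below your target ratio.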

Electronic Speed Controllers (ESCs): You'll want to specify ESCs that can handle your current requirements, based on motor type, propellers and communications interface.
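One simple way to size an ESC is to take the motor's peak current draw with your chosen propeller (from the motor's datasheet), add headroom, and pick the smallest continuous rating that covers the result. In the sketch below, the 20% headroom, the 18 A motor draw and the list of available ESC ratings are all hypothetical values for illustration.

```python
from typing import Iterable


def pick_esc_rating(max_motor_current_a: float, available_ratings_a: Iterable[float], headroom: float = 1.2) -> float:
    """Smallest available continuous ESC rating covering peak motor current plus headroom."""
    required_a = max_motor_current_a * headroom
    for rating in sorted(available_ratings_a):
        if rating >= required_a:
            return rating
    raise ValueError(f"no available ESC rating covers {required_a:.1f} A")


if __name__ == "__main__":
    # Hypothetical: 18 A peak motor draw, ESCs offered in 20/25/30/40 A sizes.
    print(pick_esc_rating(18, [20, 25, 30, 40]))
```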

Communications (Wi-Fi/LTE/Microhard/5G): Although we're striving for autonomy, there are many reasons to keep a communications link, most typically to a Ground Control Station. With an advanced computer on board, we can use 4G LTE, 5G and Microhard connections. Wi-Fi is also typically available.

Radio Transmitter and Receiver: Use these to communicate with and control your drone during testing.

Propellers: You need four, two spinning clockwise and two counter-clockwise. The m500 uses 1045 propellers.
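The reason for the clockwise/counter-clockwise split is torque: each spinning propeller twists the frame the opposite way, with a reaction torque that grows roughly with the square of its speed. The sketch below sums those torques for a quad. The drag-torque coefficient and the sign convention (+1 for counter-clockwise, -1 for clockwise) are assumptions; the point is that two of each at equal speed cancel out, and that speeding up one pair while slowing the other is how a quadcopter yaws without a tail rotor.

```python
from typing import Sequence


def net_yaw_torque_nm(speeds_rad_s: Sequence[float], spin_dirs: Sequence[int], k_drag: float = 1e-7) -> float:
    """Net reaction torque on the frame about the vertical axis.

    spin_dirs: +1 for a counter-clockwise propeller, -1 for clockwise (assumed convention).
    Each propeller's reaction torque opposes its spin and scales with speed squared.
    k_drag is a hypothetical drag-torque coefficient in N*m*s^2.
    """
    if len(speeds_rad_s) != len(spin_dirs):
        raise ValueError("need one spin direction per motor")
    return sum(-d * k_drag * w ** 2 for w, d in zip(speeds_rad_s, spin_dirs))


if __name__ == "__main__":
    dirs = [1, -1, 1, -1]  # two counter-clockwise, two clockwise
    print(net_yaw_torque_nm([1000] * 4, dirs))                # balanced: no net yaw
    print(net_yaw_torque_nm([1000] * 4, [1, 1, 1, 1]))        # all one way: frame spins
    print(net_yaw_torque_nm([1100, 1000, 1100, 1000], dirs))  # deliberate yaw command
```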

Battery and Charger: Batteries come in different types and are most typically described by cell count and capacity. These directly determine available power/thrust and flight time.
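Cell count and capacity translate into a rough flight-time estimate. The sketch below assumes LiPo cells at a nominal 3.7 V each, that only about 80% of rated capacity is used (to avoid deep discharge), and a constant average current draw. All three are simplifications, and the 4-cell, 5000 mAh, 20 A example is hypothetical rather than the m500's actual battery.

```python
def pack_energy_wh(cells: int, capacity_mah: float, nominal_cell_v: float = 3.7) -> float:
    """Nominal pack energy: series voltage times capacity."""
    return cells * nominal_cell_v * capacity_mah / 1000


def flight_time_min(capacity_mah: float, avg_current_a: float, usable_fraction: float = 0.8) -> float:
    """Rough flight time from usable capacity and a constant average current draw."""
    return capacity_mah / 1000 * usable_fraction / avg_current_a * 60


if __name__ == "__main__":
    # Hypothetical pack and load: 4 cells, 5000 mAh, 20 A average draw.
    print(f"Energy: {pack_energy_wh(4, 5000):.1f} Wh")
    print(f"Flight time: {flight_time_min(5000, 20):.1f} min")
```

In practice, doubling capacity does not double flight time, because the heavier battery raises the current needed just to stay in the air.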

Power Distribution Board: A common item used to distribute power from your battery to the various components. In this example, it is built into the frame. The m500 also uses the VOXL Power Module v3 (supplies up to 30W for intensive edge computation).
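Because the power module's output is finite (up to 30W for the VOXL Power Module v3), it's worth totaling the electronics' draw before adding payloads. The loads in the sketch below are hypothetical placeholders; substitute measured or datasheet figures for your own components. The budget covers only the electronics fed by the module, since in a typical build the motors draw from the battery through the ESCs.

```python
from typing import Mapping


def power_headroom_w(loads_w: Mapping[str, float], supply_limit_w: float = 30.0) -> float:
    """Remaining supply capacity after all listed loads (negative means over budget)."""
    return supply_limit_w - sum(loads_w.values())


if __name__ == "__main__":
    # Hypothetical load estimates in watts, not measured values.
    loads = {
        "companion computer": 10.0,
        "image sensors": 3.0,
        "LTE modem": 4.0,
        "other peripherals": 2.0,
    }
    headroom = power_headroom_w(loads)
    status = "OK" if headroom >= 0 else "OVER BUDGET"
    print(f"Headroom: {headroom:.1f} W ({status})")
```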

You Can Do It!

The number of use cases for autonomous drones is exploding. ModalAI is committed to enabling builders across industries to achieve the core functionality that will take their product or service to the next level. Innovators are elevating different types of vehicles by giving them the ability to sense, think and act. The drone enthusiasts at ModalAI have done the hard work of assembling single-unit companion computers integrated with image sensors to make your next build seamless. Whether you are using drones for personal or enterprise applications, our product line, ranging from made-in-the-USA PCBs to fully built, ready-to-fly drones, will help you get your project started quickly and affordably.

- Starling 2 Max is the newest development drone powered by VOXL 2. Starling 2 Max is NDAA-compliant, can carry up to 500g of additional payload, and flies for 55 minutes.

- Starling 2 is ModalAI's lightest development drone to date. Weighing in at only 270g, the Starling 2 is NDAA-compliant, offers 40+ minutes of flight time, and is powered by VOXL 2.

- VOXL 2 Sentinel is fully built and ready to fly out of the box, powered by the VOXL 2 autopilot.

- VOXL 2 Flight Deck is perfect for those who want to customize their own frame. The Flight Deck comes already assembled with the processing power, cables, regulators and integrated vision sensors your drone needs, saving you hours of work. Simply mount it onto the frame and watch your drone take flight.

- VOXL 2 is the newest Blue UAS Framework 2.0 autopilot. At only 16 grams, VOXL 2 boasts premium processing power from the QRB5165, 15+ TOPS, and 5G connectivity.

- VOXL 2 Mini is an 11g companion computer and flight controller that is smaller than an Oreo! VOXL 2 Mini is powered by the Qualcomm QRB5165 and features an industry-standard 30.5x30.5mm mount, perfect for FPV drones.

- Flight Core is our very own flight controller that will take your vehicle to new heights.

At ModalAI, we love solving the many challenges of robotic autonomy and building the functional blocks that let others jumpstart their projects. We focus on creating usable, documented and easily integrated systems. We are building development workflows and tools and making them open source. Can you give your robot autonomy? Yes, you can! With ModalAI development kits and software stacks, it's possible in a few hours.

Ready to activate autonomy? Visit ModalAI.com to get started or contact us at contact@modalai.com